How to Make Transformers More Efficient and Accurate by Streamlining Their Feed-Forward Networks

As we celebrate the scalability of Transformers, a parallel movement is underway to make these models not just bigger but also smarter, more efficient, and more practical for real-world applications. This involves addressing crucial issues like latency, memory usage, and disk space consumption.

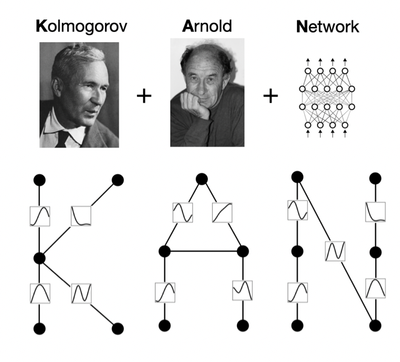

Researchers have been hard at work, exploring various methods to tackle these challenges. These methods include component optimization, parameter sharing, and dimensionality reduction. Among the essential components of the widely used Transformer architecture, the Feed Forward Network (FFN) and Attention mechanisms stand out.

Attention: Imagine a Transformer as a language-savvy detective. The Attention mechanism acts as its magnifying glass, allowing it to uncover intricate relationships and dependencies between words in a sentence, regardless of their positions. It helps the model decipher which parts of the input text are most relevant to each word it's currently analyzing. Understanding context and connections within phrases hinges on this vital mechanism.

Feed Forward Network (FFN): Picture the FFN as the Transformer's toolbox, filled with mathematical instruments for non-linearly transforming each input token independently. It adds complexity and nuance to the model's understanding of each word by performing specific mathematical operations on word representations.
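To make "transforming each input token independently" concrete, here is a minimal sketch of a position-wise FFN in plain Python. The toy sizes, the random weights, the ReLU activation, and the helper names (`linear`, `ffn`, `random_matrix`) are assumptions made for illustration, not details taken from the research discussed here.

```python
import random

D_MODEL, D_FF = 4, 8  # toy sizes, chosen for illustration only


def linear(x, weight, bias):
    """Compute weight @ x + bias for a single token vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def ffn(x, params):
    """Position-wise FFN: expand to D_FF, apply ReLU, project back to D_MODEL."""
    w1, b1, w2, b2 = params
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]
    return linear(hidden, w2, b2)


def random_matrix(rows, cols, rng):
    """Hypothetical helper: a rows x cols matrix of small random weights."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
params = (
    random_matrix(D_FF, D_MODEL, rng), [0.0] * D_FF,     # expansion layer
    random_matrix(D_MODEL, D_FF, rng), [0.0] * D_MODEL,  # projection layer
)

tokens = [[rng.uniform(-1, 1) for _ in range(D_MODEL)] for _ in range(3)]

# The same function is applied to every token separately.
outputs = [ffn(t, params) for t in tokens]

# Changing a neighbouring token leaves the output for token 0 untouched.
altered = [tokens[0], [9.0] * D_MODEL, tokens[2]]
assert ffn(altered[0], params) == outputs[0]
```

Unlike Attention, nothing in `ffn` looks at the other tokens in the sequence, which is why the final assertion holds.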

Recent research has zeroed in on the FFN's role within the Transformer architecture. What these researchers discovered was intriguing: the FFN, despite being a substantial component of the model with a significant parameter footprint, harbors redundancy. Remarkably, they found a way to trim down the model's parameter count without significantly sacrificing accuracy.

Their strategy? Removing the FFN from the decoder layers and instead employing a single shared FFN across the encoder layers.

Decoder Layers: In a standard Transformer model, every encoder layer and every decoder layer has its own FFN. The researchers removed the FFN from the decoder layers entirely.

Encoder Layers: Instead of individual FFNs for each encoder layer, they utilized a single FFN shared across all encoder layers.
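The two changes above can be sketched structurally as follows. This is a simplified illustration, not the researchers' implementation: the class names, the `build_shared_ffn_stack` helper, and the layer counts and dimensions are all assumptions, and attention sub-layers are left out.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class FFN:
    """Stand-in for a feed-forward block; only its dimensions are tracked."""
    d_model: int
    d_ff: int


@dataclass
class Layer:
    """Stand-in for a Transformer layer; attention is omitted in this sketch."""
    name: str
    ffn: Optional[FFN]  # None means the layer has no FFN at all


def build_shared_ffn_stack(n_enc: int, n_dec: int,
                           d_model: int, d_ff: int) -> Tuple[List[Layer], List[Layer]]:
    """Hypothetical helper: one FFN shared by all encoder layers, none in the decoder."""
    shared = FFN(d_model, d_ff)
    encoder = [Layer(f"encoder_{i}", shared) for i in range(n_enc)]
    decoder = [Layer(f"decoder_{i}", None) for i in range(n_dec)]
    return encoder, decoder


def count_distinct_ffns(layers: List[Layer]) -> int:
    """Count FFN objects by identity, so a shared FFN is counted once."""
    return len({id(layer.ffn) for layer in layers if layer.ffn is not None})


# Assumed configuration: 6 encoder and 6 decoder layers.
encoder, decoder = build_shared_ffn_stack(6, 6, d_model=1024, d_ff=4096)
print(count_distinct_ffns(encoder + decoder))
```

Every encoder layer holds a reference to the same `FFN` object, so its weights would be stored once however many layers use it.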

The results? Let's delve into the benefits:

Parameter Reduction: By discarding and sharing the FFN components, they achieved a dramatic reduction in the model's parameters.

Modest Accuracy Drop: Despite removing a considerable number of parameters, the model's accuracy only dipped slightly. This suggests that the multiple FFNs in the encoder and the decoder exhibit some degree of functional overlap.

Scaling Back Up: To maintain or enhance the model's performance, they widened the hidden dimension of the shared FFN until the model was back at its original parameter count. The outcome? Compared with the original model, the widened version improved in both accuracy and latency.
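A rough back-of-the-envelope calculation shows how much room the sharing frees up and how wide a single FFN could become within the original budget. The dimensions below (model width 1024, FFN width 4096, 6 encoder and 6 decoder layers) are assumed for illustration, and the count covers FFN weights and biases only, ignoring attention and embeddings.

```python
def ffn_params(d_model: int, d_ff: int) -> int:
    """Weights and biases of two linear layers: d_model -> d_ff -> d_model."""
    return 2 * d_model * d_ff + d_ff + d_model


# Assumed configuration, for illustration only.
D_MODEL, D_FF, N_ENC, N_DEC = 1024, 4096, 6, 6

# Baseline: one FFN per encoder layer and one per decoder layer.
baseline = (N_ENC + N_DEC) * ffn_params(D_MODEL, D_FF)

# After the change: a single FFN shared by the encoder, none in the decoder.
shared = ffn_params(D_MODEL, D_FF)
saved_fraction = 1 - shared / baseline


def widest_d_ff(d_model: int, budget: int) -> int:
    """Largest hidden size whose single FFN still fits in the parameter budget."""
    # ffn_params(d_model, d) = d * (2 * d_model + 1) + d_model, solved for d.
    return (budget - d_model) // (2 * d_model + 1)


wide_d_ff = widest_d_ff(D_MODEL, baseline)
print(f"FFN parameters saved by sharing: {saved_fraction:.1%}")
print(f"Hidden size that refills the budget: {wide_d_ff}")
```

Under these assumptions, the single wide FFN ends up roughly twelve times as wide as each original FFN, since it absorbs the budget of all twelve.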

This research unveils an exciting frontier in Transformer design. The Feed Forward Network can be removed from the decoder layers and shared across the encoder layers with only a slight loss in accuracy. This not only lightens the model's parameter footprint but also makes it more practical across diverse NLP applications. The work, a collaboration between researchers at Apple and Equall AI, promises to influence how future Transformer models for natural language processing are designed.
