Artificial Intelligence

How to Make Transformers More Efficient and Accurate by Streamlining Their Feed-Forward Networks

As we celebrate the scalability of Transformers, a parallel movement is underway to make these models not just bigger but also smarter, more efficient, and more practical for real-world applications. This involves addressing crucial issues like latency, memory usage, and disk space consumption. Researchers have been hard at work, exploring...

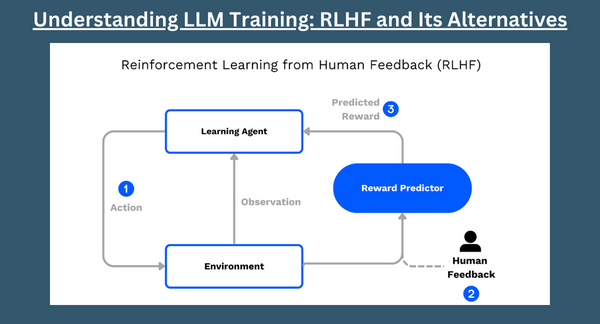

Understanding LLM Training: RLHF and Its Alternatives

Large Language Models (LLMs) have considerably changed the way we interact with AI-powered systems, from chatbots to content generation. One crucial aspect of LLM development is the training process, which includes Reinforcement Learning from Human Feedback (RLHF) as a key component. In this informative article, the training process...

NExT-GPT's Journey into the Multimodal Future

A 'modality' refers to a particular mode or method of communication or representation. Modalities can encompass different ways in which information is presented or perceived, such as text, images, videos, audio, or any other format that conveys information. In the context of multimodal AI systems, these models are designed to...

Harnessing the Power of GPT-3.5 Turbo: Fine-Tuning and API Advancements

Customize Your AI: Personalize GPT-3.5 Turbo for Exceptional Results

In the ever-evolving landscape of artificial intelligence, OpenAI has once again raised the bar with exciting updates to its GPT-3.5 Turbo model. Developers and businesses can now embark on a journey of customization, crafting AI models that not only...